Seminar: Modular deep learning

Speaker: Edoardo Ponti, Lecturer at University of Edinburgh

Where: Zoom (Zoom login required)

When: June 9th, 12:00 - 13:00

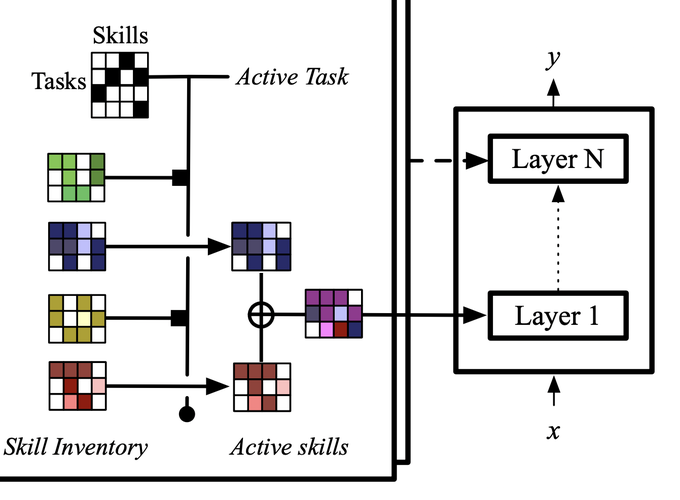

Transfer learning has recently become the dominant paradigm of machine learning. Pre-trained models fine-tuned for downstream tasks achieve better performance with fewer labelled examples. Nonetheless, it remains unclear how to develop models that specialise towards multiple tasks without incurring negative interference and that generalise systematically to non-identically distributed tasks. Modular deep learning has emerged as a promising solution to these challenges. In this framework, units of computation are often implemented as autonomous parameter-efficient modules. Information is conditionally routed to a subset of modules and subsequently aggregated.

These properties enable positive transfer and systematic generalisation by separating computation from routing and updating modules locally. In this talk, I will introduce a general framework for modular neural architectures, providing a unified view over several threads of research that evolved independently in the scientific literature. In addition, I will provide concrete examples of their applications: 1) cross-lingual transfer by recombining task-specific and language-specific sub-networks; 2) knowledge-grounded text generation by Fisher-weighted addition of modules promoting positive behaviours (e.g. abstractiveness) or negation of modules promoting negative behaviours (e.g. hallucinations); 3) generalisation to new NLP and RL tasks by jointly learning to route information to a subset of modules and to specialise them towards specific skills (common sub-problems recurring across different tasks).
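As a rough illustration of the routing-and-aggregation idea described in the abstract, the sketch below sends an input to the top-scoring subset of modules and averages their outputs. It is not the speaker's method: the names `make_module` and `route_and_aggregate`, the affine toy modules, the fixed router scores and the softmax-weighted average are all assumptions made for this example. In practice the routing function would typically be learned, and the modules would be parameter-efficient components of a pre-trained network.

```python
import math


def make_module(scale, shift):
    """Hypothetical stand-in for a module: an elementwise affine map."""
    def module(x):
        return [scale * v + shift for v in x]
    return module


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_and_aggregate(x, modules, router_scores, k=2):
    """Route x to the k highest-scoring modules, then aggregate.

    Aggregation here is a softmax-weighted average of the selected
    modules' outputs (an assumption; other aggregation functions exist).
    """
    # Routing: pick the indices of the k modules with the highest scores.
    top = sorted(range(len(modules)),
                 key=lambda i: router_scores[i], reverse=True)[:k]
    # Renormalise the scores over the selected subset only.
    weights = softmax([router_scores[i] for i in top])
    # Computation: only the selected modules process the input.
    outputs = [modules[i](x) for i in top]
    # Aggregation: weighted average, one value per input dimension.
    aggregated = [sum(w * out[d] for w, out in zip(weights, outputs))
                  for d in range(len(x))]
    return top, aggregated


# Three toy modules; the fixed scores below favour the first two.
modules = [make_module(1.0, 0.0), make_module(2.0, 0.0), make_module(0.0, 1.0)]
selected, y = route_and_aggregate([1.0, 2.0], modules,
                                  router_scores=[2.0, 2.0, -5.0], k=2)
```

Because the third module is never selected, it does no computation for this input and would receive no update in training, which is the sense in which modules can be updated locally.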

Speaker

Edoardo Maria Ponti is a Lecturer (≈ Assistant Professor) in Natural Language Processing at the University of Edinburgh, where he is part of the “Institute for Language, Cognition, and Computation” (ILCC), and an Affiliated Lecturer at the University of Cambridge. Previously, he was a visiting postdoctoral scholar at Stanford University and a postdoctoral fellow at Mila and McGill University in Montreal. In 2021, he obtained a PhD in computational linguistics from the University of Cambridge, St John’s College. His main research foci are modular deep learning, sample-efficient learning, faithful text generation, computational typology and multilingual NLP. His research earned him a Google Research Faculty Award and two Best Paper Awards at EMNLP 2021 and RepL4NLP 2019. He is a board member and co-founder of SIGTYP, the ACL special interest group for computational typology, and a scholar of the European Lab for Learning and Intelligent Systems (ELLIS). He is a (terrible) violinist and an aspiring practitioner of heroic viticulture.