Thesis Proposals

Your master's thesis can be an exciting time (and maybe the start of something more). In our lab, we focus on selected topics that we believe will be crucial to the future of neural networks, while applying what we know to real-world problems, often in partnership with other scientific and industrial research groups. Here is a summary of what I am excited about at the moment, in five points: 👇🏻

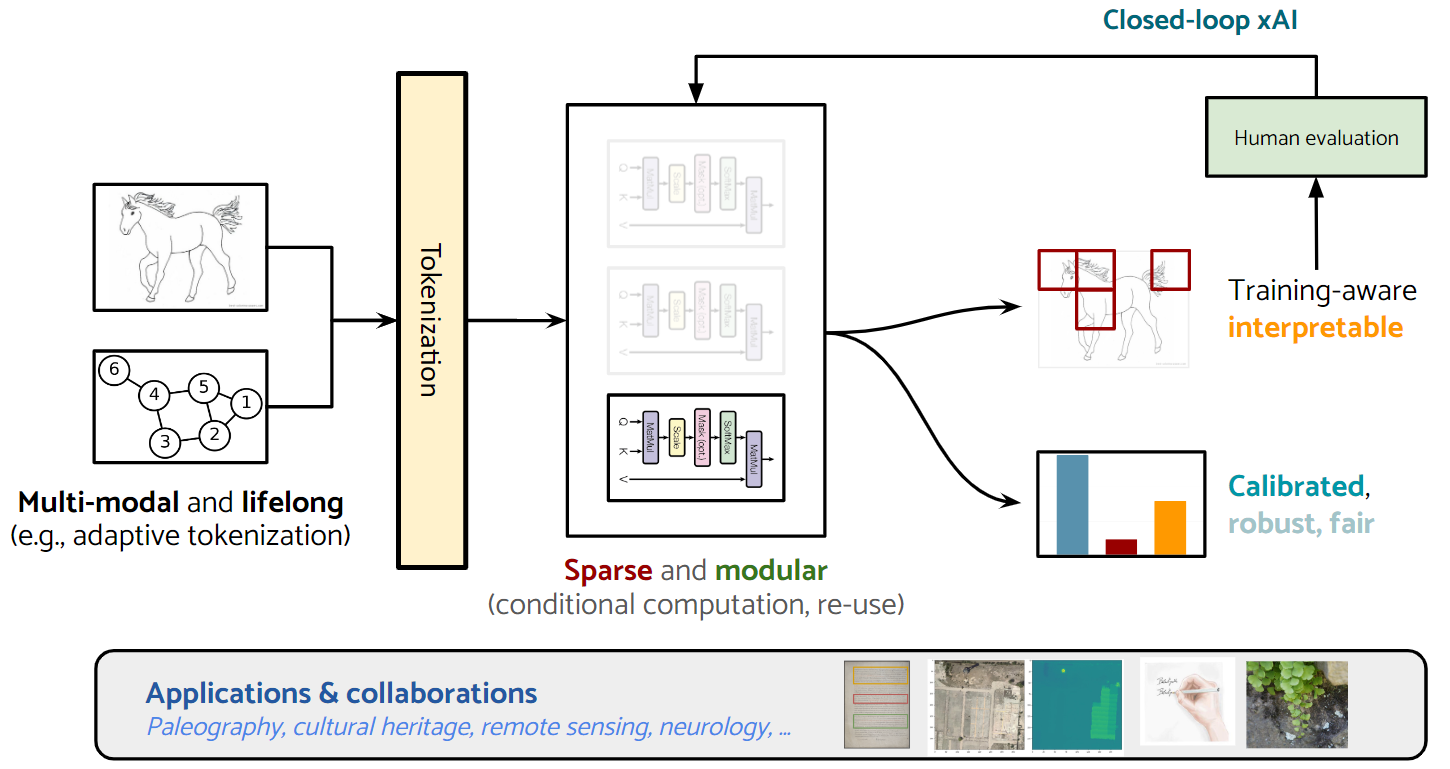

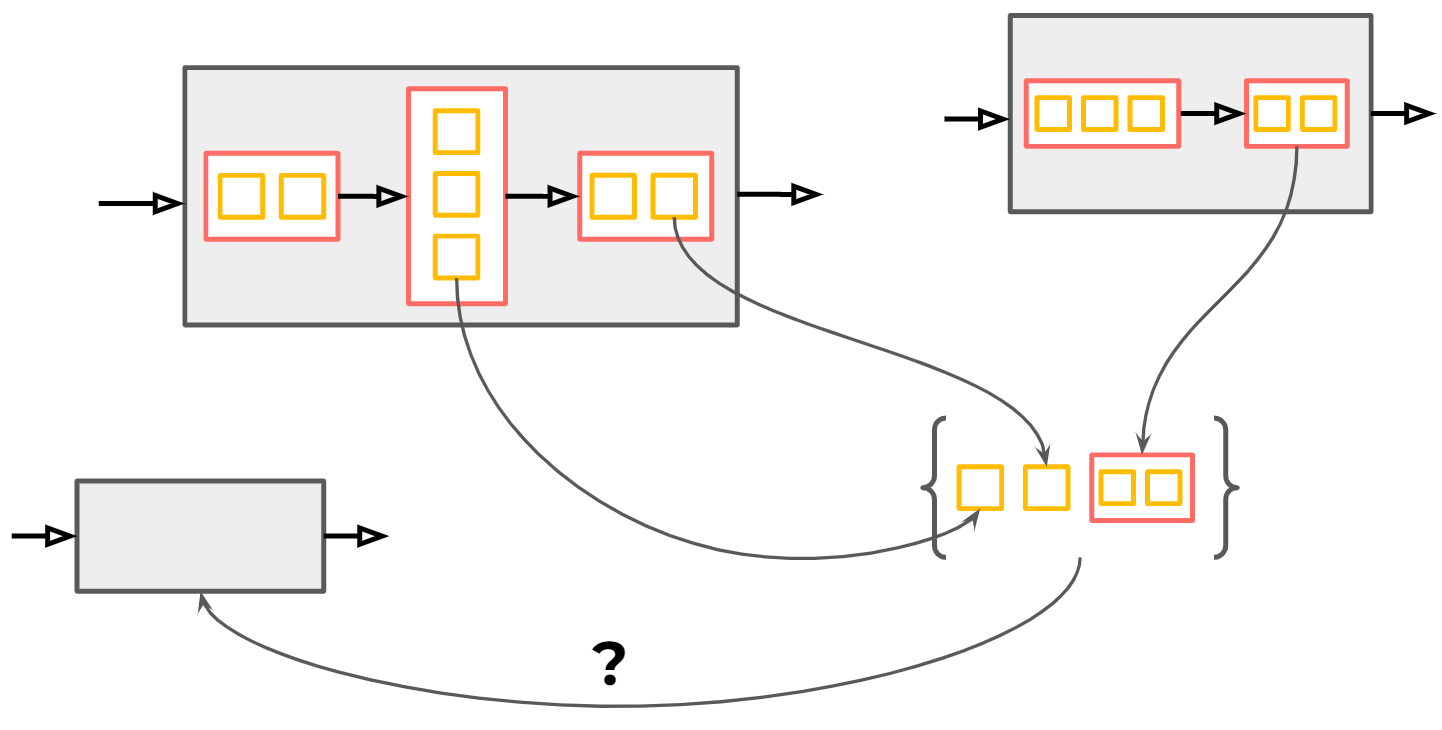

1. Neural networks will be modular, dynamic, efficient

A modular neural network can decide dynamically (for every input) which of its components to activate [1]. There are many ways to achieve this, ranging from conditional computation to mixture-of-experts (MoE) [2] and early exiting. This can dramatically change the way we design and use networks, e.g.:

- First, activating only the required components makes the network faster and more efficient, which has significant implications for inference in low-latency and resource-constrained environments.

- Second, selecting components is fundamentally a discrete selection problem [3], opening up new avenues of research on differentiable sampling of discrete structures (e.g., imagine learning to subsample only the parts of the input that are relevant to the current task).

- Third, modularity can allow us to encapsulate information and reuse components and blocks across different architectures! While still in its infancy, this could revolutionize the way we train and use these models.
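As a concrete illustration of conditional computation, here is a minimal sketch of top-k routing in a mixture-of-experts layer, in plain Python with no dependencies. The gating scheme (one linear score per expert, with a softmax renormalised over the k selected experts) and the names `moe_forward`, `gate_weights` and `experts` are illustrative assumptions, not a specific published architecture. Only the selected experts are ever called, which is where the efficiency gain comes from; the hard top-k choice is also the discrete selection step discussed above, and it is not differentiable as written.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    k: int = 1,
) -> Tuple[Vector, List[int]]:
    """Route x to the top-k experts and return their gated mixture.

    Hypothetical sketch: assumes every expert maps a vector to a vector
    of the same length as x.
    """
    # One gating logit per expert: dot product of x with that expert's gate vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Indices of the k largest logits (sorted() is stable, so ties keep the lower index).
    top = sorted(range(len(logits)), key=lambda i: -logits[i])[:k]
    # Renormalise the gate over the selected experts only.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # conditional computation: unselected experts never run
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out, top
```

For example, with input `[3.0, 1.0]`, gate vectors `[[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]` and `k=1`, only expert 0 is called and its output is returned with weight 1.0. Making this choice learnable end-to-end typically requires a relaxation or a gradient estimator for discrete samples.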

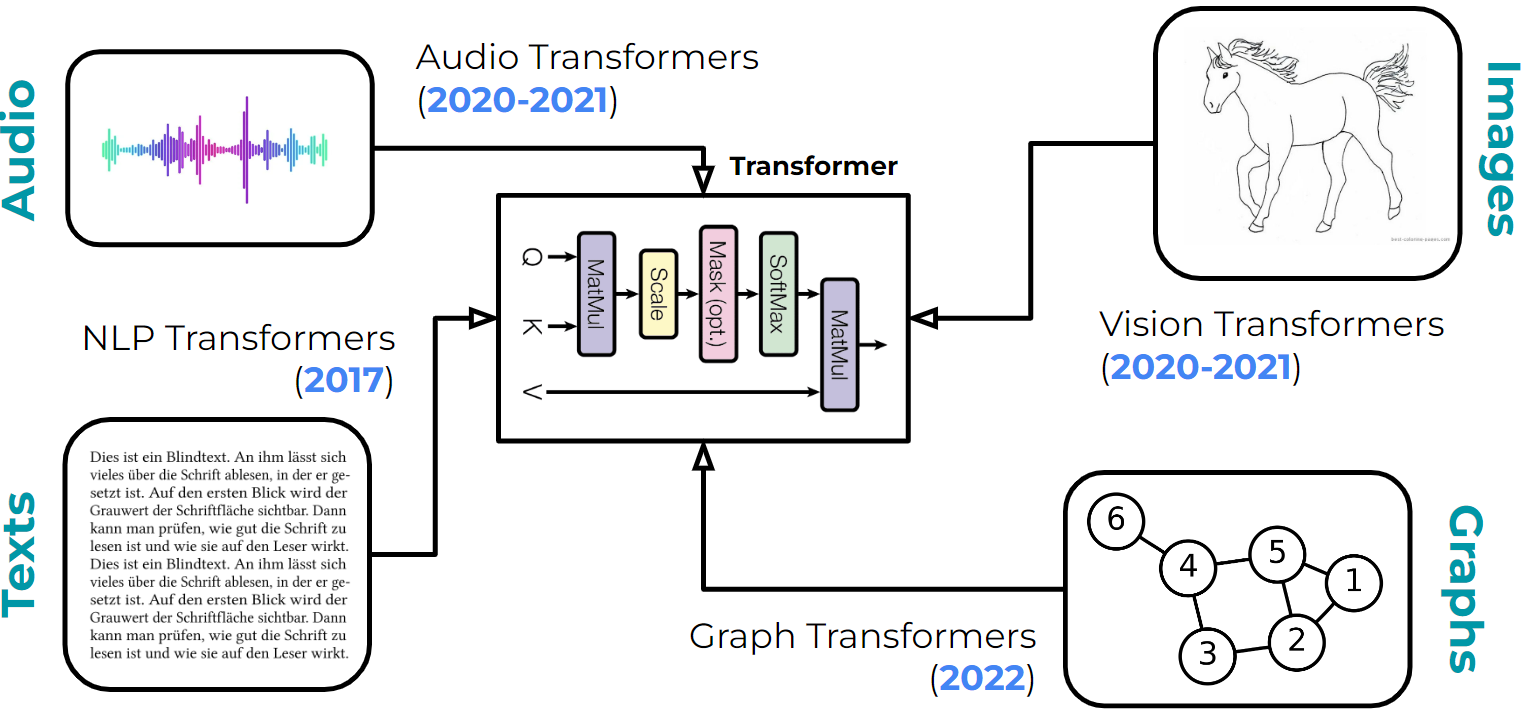

2. 🗫 Learning will be multimodal and lifelong

In our lab, we have developed customized architectures for many types of data, including graphs, point clouds, speech, and music. Recently, transformers have changed this picture: they can process all sorts of inputs, given an appropriate tokenization and suitable positional embeddings.

This opens up new and exciting questions, such as:

- How should we tokenize, and design positional embeddings for, complex structures such as point clouds or higher-order graphs? For example, how can a transformer process a molecule with three-way or four-way relations between atoms?

- How can we tokenize and integrate multimodal information inside a model, e.g., object-centric information about an image?

- What is the best way to learn continually, not only when tasks change but also when domains change? Can we continually train networks to process data from heterogeneous, multimodal domains?
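For plain sequences, the positional-embedding question has a well-known baseline: the fixed sinusoidal embeddings of the original transformer (Vaswani et al., 2017). A minimal pure-Python sketch follows; the function names `sinusoidal_positions` and `add_positions` are illustrative. Note that it assumes a single linear order over the tokens, which is exactly what point clouds or molecules with higher-order relations lack.

```python
import math
from typing import List

Matrix = List[List[float]]


def sinusoidal_positions(num_positions: int, dim: int) -> Matrix:
    """Fixed sinusoidal positional embeddings, one row per position.

    PE[pos][2i]   = sin(pos / 10000 ** (2i / dim))
    PE[pos][2i+1] = cos(pos / 10000 ** (2i / dim))
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    table: Matrix = []
    for pos in range(num_positions):
        row: List[float] = []
        for i in range(dim // 2):
            # Each sin/cos pair uses a geometrically decreasing frequency.
            angle = pos / (10000 ** (2 * i / dim))
            row.extend([math.sin(angle), math.cos(angle)])
        table.append(row)
    return table


def add_positions(tokens: Matrix) -> Matrix:
    """Add positional information to a sequence of equal-width token embeddings."""
    if not tokens:
        return []
    pe = sinusoidal_positions(len(tokens), len(tokens[0]))
    return [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, pe)]
```

Because each row depends on the token's index, permuting the tokens changes their embeddings; for sets, graphs, or hypergraphs there is no canonical index to feed in, which is where the open questions above begin.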

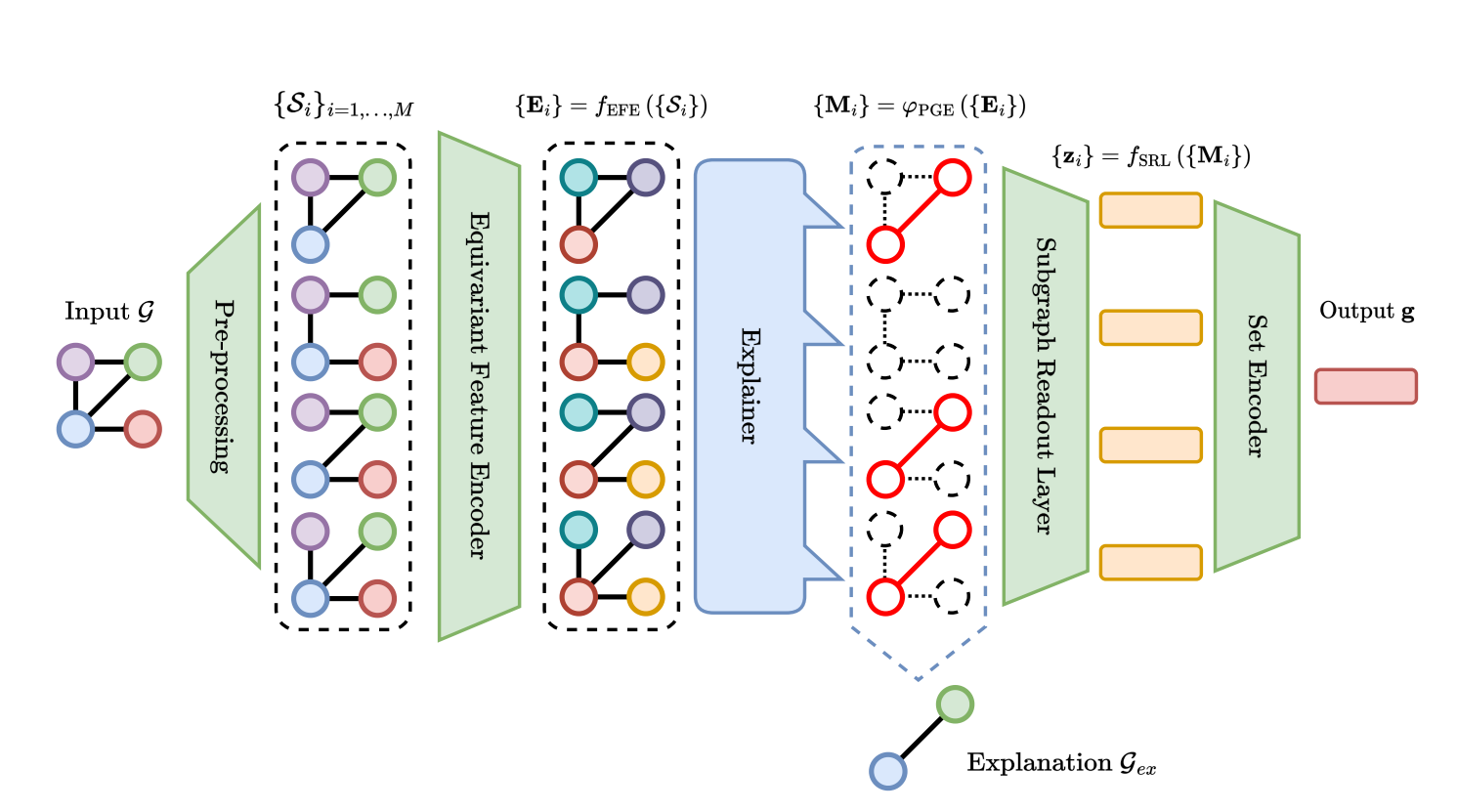

3. 🔬 Explainability will power countless scientific discoveries

Explainability is the process of providing information about the predictions of a given network, e.g., through input saliency or data attribution. Over the last few years, we have taken part in several scientific and engineering collaborations (e.g., analyzing handwriting in human aging or Latin manuscripts), and we have learned that the current generation of explainable AI (xAI) methods is too limited to give scientists and engineers real insight into their domain.

We envision a completely different future, where networks automatically provide human-understandable explanations of their predictions, e.g., through concept-based models [4]. Importantly, a system that can learn which parts of the input, or which relations, are important can also be steered toward a desired behaviour, allowing scientists to engage in a two-way feedback loop with the model to validate or correct its findings [5]. This requires new techniques to design and train the models, as well as improvements in user interfaces and experimental design to test them.
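One well-known concept-based design, the concept bottleneck model (Koh et al., 2020), illustrates the steering idea: the label is predicted only from a small set of interpretable concept scores, so an expert can overwrite a concept and immediately see how the prediction changes. The minimal sketch here assumes hypothetical linear-plus-sigmoid stages, hand-set weights, and illustrative function names; it is not the method of the works cited on this page.

```python
import math
from typing import Dict, List, Optional, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_concepts(x: List[float], concept_weights: List[List[float]]) -> List[float]:
    """Stage 1: map the raw input to probabilities of human-interpretable concepts."""
    return [sigmoid(sum(w * xi for w, xi in zip(ws, x))) for ws in concept_weights]


def predict_label(concepts: List[float], label_weights: List[float], bias: float = 0.0) -> float:
    """Stage 2: the final prediction sees only the concepts (the 'bottleneck')."""
    return sigmoid(bias + sum(w * c for w, c in zip(label_weights, concepts)))


def cbm_forward(
    x: List[float],
    concept_weights: List[List[float]],
    label_weights: List[float],
    interventions: Optional[Dict[int, float]] = None,
) -> Tuple[List[float], float]:
    """Run both stages; an expert may overwrite any concept by index before stage 2."""
    concepts = predict_concepts(x, concept_weights)
    for idx, value in (interventions or {}).items():
        concepts[idx] = value  # human feedback: correct the model's concept estimate
    return concepts, predict_label(concepts, label_weights)
```

The design choice that enables two-way feedback is the bottleneck itself: since the label depends on the input only through the concepts, correcting a concept is a direct, inspectable way to change the model's reasoning.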

4. 🦾 We will need more robustness and fairness

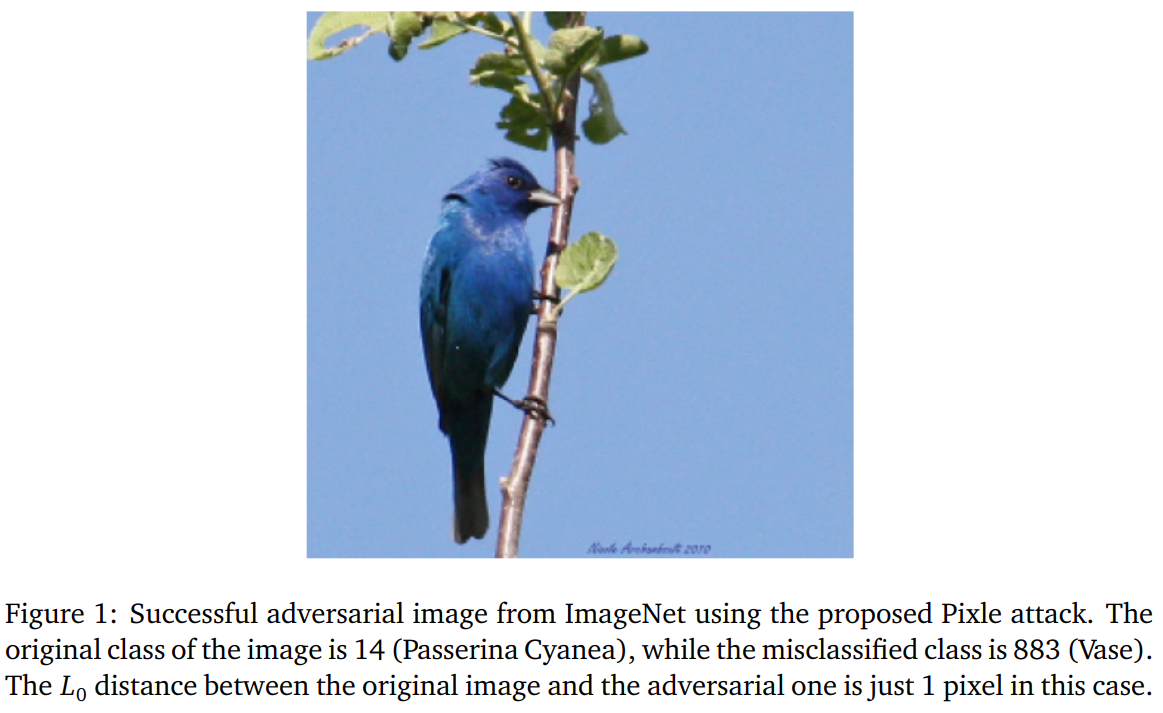

In the real world, accuracy and inference speed are just the beginning: beyond explainability, there are many other properties a model should satisfy. Over the last few years, we have repeatedly experimented with new fairness algorithms (e.g., for link prediction in social networks) and with new adversarial attacks and defences for different types of data. However, a lot remains to be done on topics ranging from robustness to calibration, domain adaptation, and many more.

5. Applications and collaborations

This is just a quick snapshot of what we are interested in. We have a number of ongoing collaborations with national and international institutions, as well as active research projects and partnerships. Contact us anytime!

References

1. Pfeiffer, J., Ruder, S., Vulić, I. and Ponti, E.M., 2023. Modular deep learning. arXiv preprint arXiv:2302.11529.

2. MoEs are most commonly associated with scaling transformer models to billions or trillions of parameters (Riquelme et al., 2021), but their modular prior can be useful in many other contexts.

3. Niculae, V., et al., 2023. Discrete latent structure in neural networks. arXiv preprint arXiv:2301.07473.

4. Zhang et al., 2022. ProtGNN: Towards self-explaining graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 36, No. 8, pp. 9127-9135).

5. Davies, A., et al., 2021. Advancing mathematics by guiding human intuition with AI. Nature, 600(7887), pp. 70-74.