Seminar: Data representations in deep generative modelling

Speaker: Kamil Deja, PhD student at Warsaw University of Technology

Where: DIET Department, second floor, aula Lettura (Via Eudossiana 18), and on Zoom (Zoom login required)

When: June 16th, 12:00 - 13:00

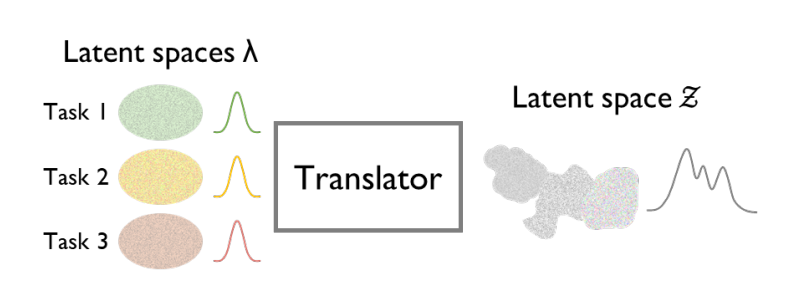

In this talk, I will give an overview of our recent work in deep generative modelling from the perspective of data representations. I will show how state-of-the-art diffusion-based deep generative models (DDGMs) build internal data representations and how we can benefit from them in other tasks. I will present our analysis of the diffusion process and show how it can be decomposed into a generative part, responsible for creating new data features, and a denoising part. Building on this analysis, I will introduce our joint diffusion method, in which we propose a single parametrization for both generative and discriminative tasks. Finally, I will show how generative models can benefit continual learning beyond the popular generative replay methods.

References

[1] Deja, K., Kuzina, A., Trzciński, T., & Tomczak, J. M. (2022). On analyzing generative and denoising capabilities of diffusion-based deep generative models. Advances in Neural Information Processing Systems, 35, 26218-26229.

[2] Deja, K., Trzciński, T., & Tomczak, J. M. (2023). Learning data representations with joint diffusion models. arXiv preprint arXiv:2301.13622.

[3] Deja, K., Wawrzyński, P., Masarczyk, W., Marczak, D., & Trzciński, T. (2022). Multiband VAE: Latent space alignment for knowledge consolidation in continual learning. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence (IJCAI-22).

Speaker

Kamil Deja is a PhD student at Warsaw University of Technology and a researcher at IDEAS NCBR, a publicly funded Polish centre for AI. He was a visiting researcher at Vrije Universiteit Amsterdam in 2022 and a science intern at Amazon Alexa in 2021 and 2022. Since 2018 he has been a member of the ALICE Collaboration at CERN. His research focuses on generative modelling and its application to continual learning. He has published his work in major ML journals and conferences, including NeurIPS, IJCAI, Interspeech, and ICASSP.